Photogrammetry is great, but a lot of the environments we see in video games simply do not exist on Earth. What doesn’t exist can’t be scanned, and altering photogrammetry data without optimising it first sounds like a nightmare.

While the Tomb Raider-esque presentation was very engaging and fun to watch, I couldn’t help but feel some uncertainty about how much this technology is going to change the 3D artist’s job. But of course, it is far from that simple, so here’s what I think.

“I just want to be able to import my ZBrush model, my photogrammetry scan, my CAD data, without wasting any time optimising, creating LODs, or even lowering the quality to make it hit framerate. Art that just works,” say the guys in the introduction, and while it sounds very nice, it also sounds suspiciously generic and obscure. The new system, Nanite, which is a new way of rendering geometry, seems to be quite capable of dealing with unoptimised models. That is great news for environment artists. Millions and millions of triangles, with even the smallest detail used as geometry rather than as a normal map on top of a low-poly model? As long as they don’t want to assemble a larger piece of scenery outside of Unreal, of course. I would be very happy to see, for example, Maya’s viewport dealing with 100 million polygons, especially when textured and lit in real time. I’m afraid that isn’t going to happen for a while. Similarly, if I wanted to manually paint geometry that dense, Substance Painter would also have to let me do that. Call me narrow-minded, but it does sound a little bit like science fiction to me.

In a video game context, “art that just works” means that it works according to game design, and for the primary purpose of supporting gameplay.

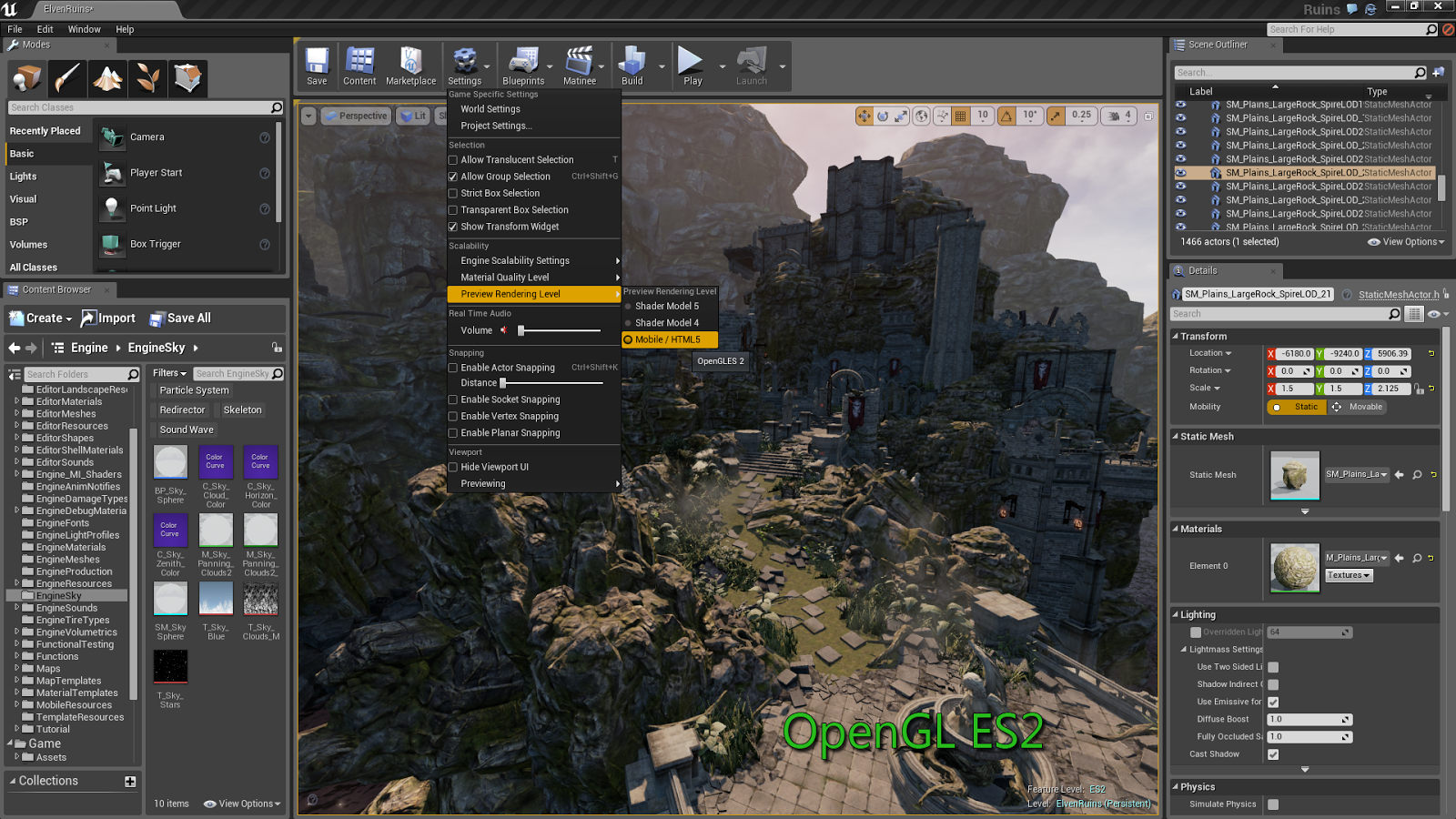

Epic’s jaw-dropping Unreal Engine 5 tech demo, “Lumen in the Land of Nanite”, was released just recently, granting a sneak peek into next-gen video game graphics.
